Game objects and components are among the most fundamental concepts in Unity. Game objects are the basic building blocks of a scene, representing entities in the virtual world, while components define the behavior and functionality of those game objects. Let’s look at how game objects and components work together.

Game Objects: A game object can be thought of as a container that holds components. On its own it does very little: every game object has a Transform component that stores its position, rotation, and scale, and everything else comes from the components you add. A game object can represent a tangible or intangible thing in the game world, for example a player character, an enemy, a collectible item, a light source, or even an empty point in space. Each game object can have its own combination of components, which gives it distinct behaviors and properties.

Components: Components are the building blocks that give game objects functionality. They define how a game object interacts with the game world, responds to user input, and behaves in general. Unity provides a wide range of built-in components that cover common functionalities such as rendering, physics simulation, audio playback, animation, and more. Additionally, you can create your own custom components to extend Unity’s capabilities and suit your specific game requirements.

By attaching components to a game object, you define its behavior and appearance. For example, a “Mesh Renderer” component (together with a “Mesh Filter” that supplies the mesh) makes the game object visible on screen, while a “Box Collider” component enables collision detection with other objects in the game world. Components can also interact with each other, so complex behaviors can be built by combining simple ones.

Component-Based Architecture: Unity follows a component-based architecture, which promotes modularity and reusability. Instead of inheriting behavior from a single class hierarchy, like in traditional object-oriented programming, Unity’s component-based system allows you to compose game objects by attaching and combining various components. This architecture provides flexibility and enables easy modification and extension of game objects without rewriting or reorganizing existing code.
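
To make the composition idea concrete, here is a minimal, engine-agnostic sketch in Python. It is not Unity's API (Unity scripts are written in C#); the `GameObject`, `Component`, `add_component`, and `get_component` names are hypothetical stand-ins that only mirror the concepts described above.

```python
class Component:
    """Base class for all behaviors; remembers which game object owns it."""

    def __init__(self):
        self.game_object = None  # set when the component is attached


class Transform(Component):
    """Position in the world; every game object in this model gets one."""

    def __init__(self, x=0.0, y=0.0, z=0.0):
        super().__init__()
        self.position = [x, y, z]


class MeshRenderer(Component):
    """Stand-in for a component that makes the object visible."""


class BoxCollider(Component):
    """Stand-in for a box-shaped collision volume."""

    def __init__(self, size=(1.0, 1.0, 1.0)):
        super().__init__()
        self.size = size


class GameObject:
    """A named container whose behavior comes from attached components."""

    def __init__(self, name):
        self.name = name
        self.components = []
        self.add_component(Transform())

    def add_component(self, component):
        component.game_object = self
        self.components.append(component)
        return component

    def get_component(self, component_type):
        """Return the first attached component of the given type, or None."""
        for component in self.components:
            if isinstance(component, component_type):
                return component
        return None


# Two objects built from the same parts in different combinations.
crate = GameObject("Crate")
crate.add_component(MeshRenderer())
crate.add_component(BoxCollider())

spawn_point = GameObject("SpawnPoint")  # only a Transform: an empty point in space
```

Neither object needed its own subclass: the crate and the spawn point differ only in which components are attached, which is the point of composing behavior instead of inheriting it.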

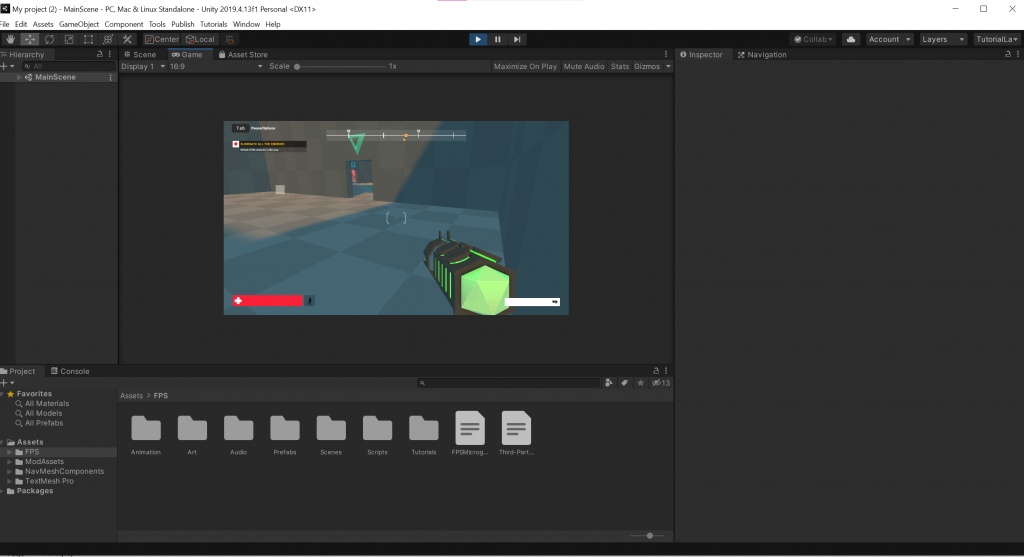

To interact with components, you can use Unity’s editor interface or write scripts that access and manipulate component properties and methods programmatically. For example, a script can move a character by modifying its Transform component, read user input through Unity’s input APIs, or trigger specific actions by calling methods on a custom script attached to a game object.
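
The sketch below illustrates the "script modifies the transform" idea in plain Python. It is a simplified model, not Unity code: `Mover`, `update`, `input_axis`, and `delta_time` are hypothetical names, and the loop stands in for the engine calling the script once per frame.

```python
from dataclasses import dataclass, field


@dataclass
class Transform:
    position: list = field(default_factory=lambda: [0.0, 0.0, 0.0])


class Mover:
    """Hypothetical script component: moves its owner's transform each frame."""

    def __init__(self, transform, speed):
        self.transform = transform
        self.speed = speed  # units per second

    def update(self, input_axis, delta_time):
        # Scale by delta_time so movement does not depend on the frame rate.
        self.transform.position[0] += input_axis * self.speed * delta_time


transform = Transform()
mover = Mover(transform, speed=4.0)

# Simulate four frames of 0.25 s each while "right" is held (axis = 1.0).
for _ in range(4):
    mover.update(input_axis=1.0, delta_time=0.25)

print(transform.position)  # [4.0, 0.0, 0.0]
```

Multiplying by the frame time is the same reason Unity scripts typically scale per-frame movement by `Time.deltaTime`.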

Summary: Game objects are the basic entities in the game world, and components define their behavior and functionality. By combining and configuring components, you can create complex, interactive experiences. Because behavior lives in components rather than in a class hierarchy, game objects are easy to customize and extend.

Interactions between Game Objects and Components

In Unity, game objects and components interact with each other to create dynamic and interactive gameplay experiences. Understanding how these interactions work is key to developing engaging games. Let’s explore the various ways game objects and components can interact in Unity.

- Component Attachment: Game objects can have multiple components attached to them. By attaching different components to a game object, you define its behavior and functionality. For example, attaching a “Rigidbody” component to a game object enables physics simulation, allowing it to respond to forces like gravity and collisions with other objects. Each component contributes to the overall behavior of the game object and can be configured individually.

- Component Communication: Components can communicate with each other to exchange information or trigger actions. This communication can occur directly between components attached to the same game object or between components attached to different game objects. For example, a “PlayerController” script attached to a player character may interact with a “Health” component to decrease the player’s health when they take damage.

- Dependency and Execution Order: Components may depend on other components or need to run in a particular order. Unity calls script events in a fixed sequence (for example, Awake before Start), but the order in which different scripts receive the same event is not guaranteed by default. The “Script Execution Order” settings in Project Settings let you run specific scripts earlier or later, and the RequireComponent attribute lets a script declare that it needs another component on the same game object. Together these help you avoid conflicts and unintended behavior during initialization and updates.

- Event System: Unity’s event system lets components respond to events such as button clicks, key presses, or mouse movements. Components subscribe to an event by registering a callback function, which runs when the event occurs. For example, a “Button” component raises its click event when pressed, and any component that registered a listener responds by executing its own action.

- Scripting: Components can be manipulated and controlled through scripts written in C#, Unity’s scripting language. By accessing component properties and methods, you can modify their behavior at runtime. Scripts can also handle user input, control animations, manage game logic, and more. Scripting provides a powerful way to interact with components and implement custom behaviors that are unique to your game.

- Prefabs: Unity’s prefab system allows you to create reusable game object templates. A prefab stores a predefined combination of components and property values, making it easy to create many instances of similar game objects with consistent behavior. Changes to a prefab propagate to all of its instances, except for properties that an instance has overridden locally, which keeps instances consistent and reduces redundancy.
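
The component communication and event items above can be sketched together. This is an illustrative Python model under stated assumptions, not Unity's API: `Health`, `HealthBar`, `PlayerController`, and the `on_damaged` callback list are hypothetical names. It shows one component calling another directly, and a third reacting through a subscription.

```python
class Health:
    """Hypothetical health component that raises an event when damaged."""

    def __init__(self, max_health):
        self.current = max_health
        self.on_damaged = []  # callbacks taking (current_health, amount)

    def take_damage(self, amount):
        self.current = max(0, self.current - amount)
        for callback in self.on_damaged:
            callback(self.current, amount)


class HealthBar:
    """Hypothetical UI component that listens for damage events."""

    def __init__(self):
        self.text = ""

    def refresh(self, current_health, amount):
        self.text = f"HP: {current_health} (-{amount})"


class PlayerController:
    """Talks to a sibling component directly through a reference."""

    def __init__(self, health):
        self.health = health

    def on_hit(self, damage):
        self.health.take_damage(damage)


health = Health(max_health=100)
health_bar = HealthBar()
health.on_damaged.append(health_bar.refresh)  # subscribe to the event

player = PlayerController(health)
player.on_hit(30)

print(health.current)   # 70
print(health_bar.text)  # HP: 70 (-30)
```

The direct call couples `PlayerController` to `Health`, while the event keeps `Health` unaware of who is listening. Which style fits depends on how tightly the two components belong together.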

These are just a few examples of the interactions between game objects and components in Unity. As a game developer, you have the freedom to design and implement a wide range of interactions to create unique and immersive gameplay experiences. By leveraging Unity’s flexible component-based architecture and scripting capabilities, you can build complex interactions that bring your game to life.
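
As one more illustration, the prefab idea from the list above can be modeled as a template whose values are shared by its instances unless an instance overrides them. The sketch is plain Python with hypothetical names (`Prefab`, `Instance`, `instantiate`, `get`); it only models the concept and does not reflect how Unity stores prefabs internally.

```python
class Prefab:
    """Hypothetical template: default property values shared by all instances."""

    def __init__(self, **defaults):
        self.defaults = defaults

    def instantiate(self, **overrides):
        return Instance(self, overrides)


class Instance:
    """Reads from the prefab unless a property was overridden locally."""

    def __init__(self, prefab, overrides):
        self.prefab = prefab
        self.overrides = dict(overrides)

    def get(self, name):
        if name in self.overrides:
            return self.overrides[name]
        return self.prefab.defaults[name]


enemy_prefab = Prefab(health=50, speed=2.0)
grunt = enemy_prefab.instantiate()
boss = enemy_prefab.instantiate(health=500)  # per-instance override

# Editing the template reaches every instance that has not overridden the value.
enemy_prefab.defaults["health"] = 80
enemy_prefab.defaults["speed"] = 2.5

print(grunt.get("health"), grunt.get("speed"))  # 80 2.5
print(boss.get("health"), boss.get("speed"))    # 500 2.5
```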

Examples and Practical Implementation

Let’s explore some practical examples of game objects and components in Unity and how they can be implemented in a game development scenario.

- Player Character: A common example is a player character controlled by the player. The player character can be represented by a game object with various components attached to it:

  - A “CharacterController” component to move the character while respecting collisions. Behaviors such as running, jumping, and crouching are implemented by a script that drives it.

  - A “Camera” component (often on a child game object) to provide a first-person or third-person perspective.

  - An “Animator” component to control character animations, such as walking, attacking, or interacting with objects.

  - A custom “Health” script to manage the player’s health and respond to damage events.

  - A collider component, such as a “Capsule Collider”, to detect and respond to collisions with other objects in the game world. (A “CharacterController” already includes its own capsule-shaped collider.)

  - A custom script component to handle player input, such as keyboard or controller inputs, and control the character’s behavior accordingly.

- Interactive Objects: Game objects can also represent interactive objects in the game world, such as doors, buttons, or collectibles. These objects can have components attached to enable specific interactions:

  - A collider with “Is Trigger” enabled to detect when the player enters a certain area, such as a zone near a door.

  - An “Animator” (or legacy “Animation”) component to play an animation when triggered, such as opening or closing a door.

  - An “AudioSource” component to play a sound effect when the object is interacted with.

  - A custom script component to define the object’s logic, such as activating a puzzle or spawning enemies when the player interacts with it.

- Enemies: Enemies or non-player characters (NPCs) in a game can also be represented by game objects with various components attached:

  - A “NavMeshAgent” component to handle pathfinding and movement of the enemy NPC.

  - A custom “Combat” script to manage the enemy’s combat behavior, such as attacking, defending, or fleeing.

  - A custom “AI” script to control the NPC’s decision-making, determining its actions based on player proximity, health, or other factors.

  - A custom “Health” script to manage the enemy’s health and respond to damage events.

  - Further custom scripts for behavior specific to this enemy, such as patrol routes, special attacks, or interactions with the game world.

- Environment: Game objects can also represent elements of the game environment, such as terrain, buildings, or obstacles. These objects may have components like:

  - A “Mesh Renderer” component to render the visual representation of the object.

  - A collider component, such as a “Box Collider” or “Mesh Collider”, to enable collision detection with other objects.

  - A “Rigidbody” component for objects that the physics engine should simulate, for example so they fall under gravity or can be pushed around. Effects such as destruction need additional scripting.

  - A custom script component to define logic for the environment, such as triggering events when the player interacts with it, changing its appearance based on gameplay events, or generating procedural terrain.

These examples demonstrate how game objects and components work together in Unity to create interactive and dynamic gameplay experiences. By combining and configuring various components, you can define the behavior, appearance, and functionality of game objects, resulting in immersive and engaging games. Unity’s flexibility and extensive component library empower developers to bring their creative ideas to life and craft unique gaming experiences.
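
The door example from the interactive objects above can be sketched as follows. This is a simplified Python model, not Unity code: `TriggerZone`, `Door`, and their methods are hypothetical, and the box test stands in for the overlap detection that a physics engine performs (in Unity, a script would typically react in `OnTriggerEnter`).

```python
class TriggerZone:
    """Hypothetical axis-aligned trigger volume that reports enter events."""

    def __init__(self, min_corner, max_corner, on_enter):
        self.min_corner = min_corner
        self.max_corner = max_corner
        self.on_enter = on_enter
        self._inside = False

    def contains(self, point):
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, point, self.max_corner))

    def update(self, player_position):
        inside = self.contains(player_position)
        if inside and not self._inside:
            self.on_enter()  # fire once, on the frame the player enters
        self._inside = inside


class Door:
    """Hypothetical door component; a real one might also start an animation."""

    def __init__(self):
        self.is_open = False
        self.times_opened = 0

    def open(self):
        self.is_open = True
        self.times_opened += 1


door = Door()
zone = TriggerZone((0, 0, 0), (2, 2, 2), on_enter=door.open)

# The player walks toward the door, enters the zone, then stays inside it.
for position in [(-3, 1, 1), (-1, 1, 1), (1, 1, 1), (1.5, 1, 1)]:
    zone.update(position)

print(door.is_open, door.times_opened)  # True 1
```

Tracking whether the player was already inside is what turns a continuous overlap into a single "enter" event, so the door opens once instead of every frame.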

Applying components to create gameplay mechanics or visual effects

- Gameplay Mechanics:

a) Physics-Based Movement: By applying the “Rigidbody” component to a game object, you can create physics-based movement. This component allows the object to respond to forces like gravity, collisions, and external impacts. By adjusting parameters like mass, drag, and constraints, you can simulate realistic movement for characters, vehicles, or objects in your game.

b) Animation and State Machines: Unity’s “Animator” component is powerful for creating complex animations and controlling gameplay mechanics. By defining animation states, transitions, and conditions, you can create character movement, combat systems, or interactive object behaviors. The “Animator” component can be used alongside scripting to control the flow of animations based on gameplay events or user input.

c) User Input and Controls: Unity reads keyboard, mouse, and controller input through its input systems: the Input Manager (configured in Project Settings and read from scripts) or the newer Input System package. For UI, the “EventSystem” component routes input to elements such as buttons. By capturing these inputs, you can create control schemes for character movement, menu navigation, or triggering actions. Combining input handling with scripting allows you to design responsive and intuitive controls for your game.

d) Audio and Sound Effects: The “AudioSource” component in Unity allows you to add sound effects to various gameplay events. By attaching this component to a game object, you can play sounds on collisions, interactions, or in response to player actions. Adjusting properties like volume, pitch, and spatial blend enhances the immersive experience and provides audio feedback to the player.

- Visual Effects:

a) Particle Systems: Unity’s “ParticleSystem” component enables the creation of visual effects like explosions, fire, smoke, and magical spells. By adjusting parameters such as emission rate, shape, color, and lifetime, you can design stunning particle effects to enhance gameplay. Particle systems can be triggered by specific events or continuously emit particles for ambient effects.

b) Post-Processing Effects: Unity’s post-processing stack applies visual effects to the entire scene or to specific camera views. With the Post Processing package, you add a “PostProcessLayer” component to the camera and “PostProcessVolume” components to the scene, then adjust settings for effects like depth of field, motion blur, color grading, and ambient occlusion. (The Universal and High Definition Render Pipelines use their own “Volume” component instead.) These effects add depth, realism, and artistic flair to your game’s visuals.

c) Shaders and Materials: Shaders and materials are assets rather than components; they take effect when a material is assigned to a renderer component such as a “Mesh Renderer”. Shaders define how a surface is drawn and how light interacts with it, creating effects like reflections, refractions, or distortions. Materials select a shader and set its parameters, such as texture, color, and transparency. By creating custom shaders or modifying existing ones, you can achieve unique visual styles or special effects for your game.

d) Cameras and Camera Effects: Unity’s “Camera” component controls the view and perspective of your game. By adjusting properties like field of view, clipping planes, and projection, and by positioning the camera, you can create cinematic experiences or unique gameplay perspectives. Effects like bloom, vignetting, depth of field, and color grading are applied to the camera’s image through post-processing and further enhance the visual quality and atmosphere of your game.
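
To show how parameters like mass and drag from the physics-based movement item shape motion, here is a deliberately simplified one-dimensional integrator in Python. It is an assumption-laden sketch, not the algorithm Unity's physics engine uses: `Body`, `add_force`, and `step` are hypothetical names, and drag is modeled as removing a fraction of the velocity each step.

```python
class Body:
    """Hypothetical rigid body with mass, linear drag, and gravity (vertical axis only)."""

    def __init__(self, mass, drag=0.0, gravity=-9.81):
        self.mass = mass
        self.drag = drag
        self.gravity = gravity
        self.velocity = 0.0
        self.height = 0.0
        self._force = 0.0

    def add_force(self, force):
        self._force += force  # accumulated until the next step

    def step(self, dt):
        # Force is divided by mass; gravity accelerates all bodies equally.
        acceleration = self._force / self.mass + self.gravity
        self.velocity += acceleration * dt
        # Drag removes a fraction of the velocity, clamped so it never reverses it.
        self.velocity *= max(0.0, 1.0 - self.drag * dt)
        self.height += self.velocity * dt
        self._force = 0.0


light = Body(mass=1.0)
heavy = Body(mass=10.0)
for body in (light, heavy):
    body.add_force(100.0)  # the same upward push on both bodies
    body.step(dt=0.02)

print(light.velocity > heavy.velocity)  # True
```

The same push accelerates the lighter body far more, and a nonzero `drag` would cap how fast a falling body can go, which is why tuning these two values changes how heavy or floaty an object feels.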

By applying these components creatively and combining them with scripting and other Unity features, you can create a wide range of gameplay mechanics and stunning visual effects. Unity’s extensive component library and flexibility empower game developers to design immersive and captivating experiences that engage players and bring their game worlds to life.
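
The animation state machine idea can also be sketched in a few lines. The Python below is a conceptual model with hypothetical names (`StateMachine`, `add_transition`, `parameters`), not Unity's Animator API: gameplay code sets named parameters, and the machine follows the first transition whose condition holds.

```python
class StateMachine:
    """Hypothetical animation state machine driven by named parameters."""

    def __init__(self, initial_state):
        self.state = initial_state
        self.parameters = {}
        self.transitions = []  # (from_state, to_state, condition)

    def add_transition(self, from_state, to_state, condition):
        self.transitions.append((from_state, to_state, condition))

    def update(self):
        # Take the first transition out of the current state whose condition holds.
        for from_state, to_state, condition in self.transitions:
            if from_state == self.state and condition(self.parameters):
                self.state = to_state
                return


animator = StateMachine("Idle")
animator.parameters = {"speed": 0.0, "attack": False}
animator.add_transition("Idle", "Walk", lambda p: p["speed"] > 0.1)
animator.add_transition("Walk", "Idle", lambda p: p["speed"] <= 0.1)
animator.add_transition("Walk", "Attack", lambda p: p["attack"])

animator.parameters["speed"] = 2.0  # gameplay code sets parameters...
animator.update()                   # ...and the machine picks the state
print(animator.state)  # Walk

animator.parameters["attack"] = True
animator.update()
print(animator.state)  # Attack
```

Keeping the conditions in the transitions rather than in the gameplay script means the script only reports facts (speed, attack pressed), and the state machine decides what to play.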

Conclusion

Understanding game objects and components is fundamental to developing games in Unity. Game objects serve as the building blocks of a scene, representing entities in the virtual world, while components define their behavior and functionality. By attaching components to game objects, you can create interactive and dynamic gameplay experiences.

Game objects in Unity can represent player characters, enemies, interactive objects, or elements of the game environment. Each game object can have a unique combination of components that determine its behavior, appearance, and interaction with the game world. Unity provides a wide range of built-in components for rendering, physics simulation, audio playback, animation, and more. Additionally, developers can create custom components to extend Unity’s capabilities and tailor them to specific game requirements.

Components in Unity allow for modularity and reusability, following a component-based architecture. Rather than relying on a single class hierarchy, components can be attached and combined to create complex behaviors and functionalities. Components can communicate with each other, respond to events, and be controlled through scripts, allowing for flexible and customizable gameplay mechanics.

By leveraging Unity’s component-based system, developers can create player movement, combat systems, physics-based interactions, animation controllers, and more. Visual effects like particle systems, post-processing effects, shaders, and materials can also be applied to enhance the game’s visuals and create immersive experiences.

Understanding game objects and components empowers game developers to design and implement interactive and engaging gameplay mechanics, as well as visually stunning effects. Unity’s flexible component-based architecture, along with its extensive library of built-in components and scripting capabilities, provides the tools needed to bring creative ideas to life and build unique gaming experiences.